Google has now brought its Live Caption feature to the Chrome browser. The feature was previously limited to select Android phones, such as Google's Pixel series. XDA Developers first noticed the new feature.

The technology uses machine learning to generate captions in real time for any video or audio being played. A lot of content on the internet does not have built-in captions. With this feature, people who are deaf or hard of hearing can access much more of it.

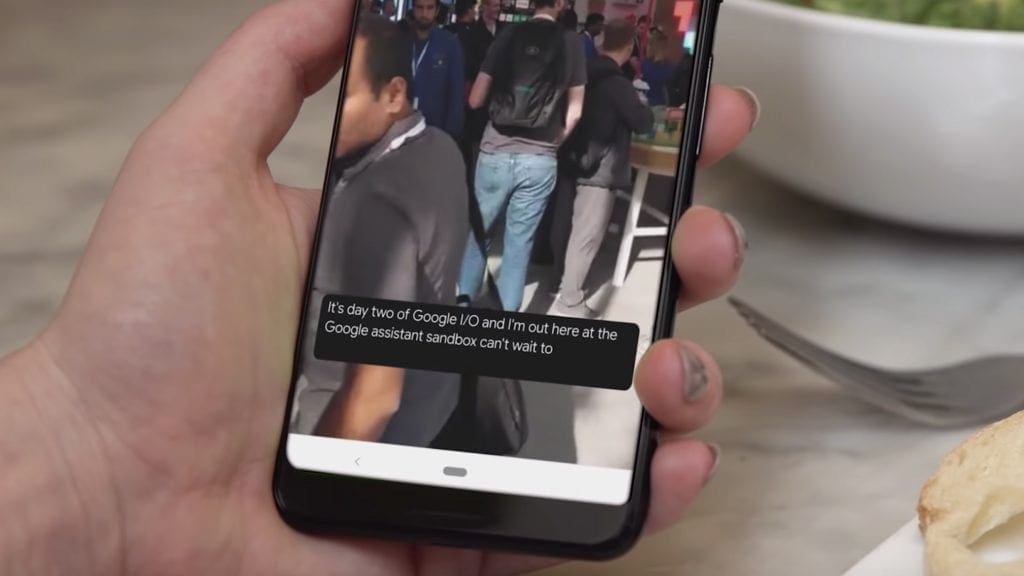

The captions appear in a small box at the bottom of the screen, and the box can be moved to suit the viewer. It appears automatically whenever audio is playing. However, the captions are generated with a slight delay, and fast speech or speech disturbances can cause mistakes. The technology is still impressive, and it works regardless of the volume.

Google Chrome Finally Makes It Accessible

So far, the captions have worked for YouTube, Twitch, podcasts, and even SoundCloud. However, only English captions are available for now. The feature can be turned on from the “Accessibility” option under the “Advanced” section of Chrome's settings. It is only available in the latest version of Google Chrome, though, so make sure the browser is up to date.

Once the feature is turned on, Google Chrome downloads a few additional files. These are the speech recognition files that the feature requires. After that, the next time a video or anything else with audio is played, captions should start appearing at the bottom.

Live Caption was first seen in the beta of Android Q, Google's mobile OS. However, only high-end phones in the Pixel and Samsung lineups had the full implementation. With the recent update, the feature should reach a much larger audience thanks to the popularity of Google Chrome.